Europe’s Forthcoming AI Act Will Have a Wide Reach and Broad Implications

by Fintechnews Switzerland August 8, 2022

Like the European Union (EU)’s General Data Protection Regulation (GDPR), which entered into force in 2016, the upcoming Artificial Intelligence (AI) Act will have extraterritorial scope and global impact.

Given the AI Act’s broad scope and the financial risks of non-compliance, businesses must prepare for these regulatory changes now and adopt best practices early on, according to a new whitepaper by Swiss data services company Unit8.

The paper, titled Upcoming AI Regulation: What to expect and how to prepare, delves into the EU’s forthcoming AI Act, providing insights into the future development of AI regulation in Europe and the potential implications for organizations worldwide.

The European Commission (EC) unveiled a proposal for a legal framework on AI in April 2021. The proposal seeks to address risks specifically created by AI applications, proposes a list of high-risk applications, sets clear requirements for AI systems used in those applications, and defines specific obligations for users and providers of high-risk AI systems.

The proposed rules also set out a conformity assessment method for AI systems, provide for enforcement after an AI system is placed on the market, and establish a governance structure at European and national level.

The legislation, which is expected to be implemented within the next two years, will apply to AI systems placed on or used in the EU market, irrespective of whether the providers are based within or outside the EU. Obligations and requirements will be addressed not only to providers of AI systems, but also to stakeholders that use those systems or that are part of the value chain, such as manufacturers, importers and distributors, underscoring the wide reach of the regulation.

The AI Act will be complex and demanding, requiring businesses to start preparing for the new rules proactively. In particular, firms should start building up the required competencies and establish partnerships with domain experts to ensure legal compliance, Unit8 recommends.

One area worth exploring is establishing a strong AI governance framework that ensures one’s AI investments are compliant from the get-go and that teams have strong guiding principles for developing AI systems, Unit8 advises. This would reduce refactoring costs and foster more effective AI development, it says.

In addition to a robust AI governance framework, organizations should also implement an effective AI risk management framework and team up with the right partners to conduct independent assessments and reviews.

Remaining compliant with the AI Act will be crucial for businesses, especially given the hefty financial penalties that await organizations that fail to comply, Unit8 warns. Violations of data governance requirements or non-compliance with the bans related to AI systems posing an unacceptable risk will be fined up to EUR 30 million or 6% of global annual company revenue.

Non-compliance with other provisions will be fined up to EUR 20 million or 4% of global annual company revenue, while providing wrong or misleading information to authorities can lead to up to EUR 10 million in fines or 2% of global annual revenue.
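To illustrate how these tiers scale with company size, here is a minimal Python sketch of the arithmetic. It assumes the "or" in each tier means whichever amount is higher, as worded in the Commission's April 2021 proposal. The tier labels and the function name `max_fine_eur` are hypothetical, chosen only for this example.

```python
# Penalty tiers as reported above: (fixed cap in EUR, percent of global annual revenue).
# The tier labels are illustrative names, not terms from the legislation.
PENALTY_TIERS = {
    "prohibited_or_data_governance": (30_000_000, 6),
    "other_provisions": (20_000_000, 4),
    "misleading_information": (10_000_000, 2),
}


def max_fine_eur(tier, global_annual_revenue_eur):
    """Return the maximum possible fine for a tier.

    Assumption: the higher of the fixed cap and the revenue-based
    amount applies.
    """
    fixed_cap, percent = PENALTY_TIERS[tier]
    revenue_based = global_annual_revenue_eur * percent / 100
    return max(fixed_cap, revenue_based)


if __name__ == "__main__":
    # A company with EUR 1 billion in global annual revenue.
    print(max_fine_eur("prohibited_or_data_governance", 1_000_000_000))
    # A company with EUR 100 million in global annual revenue.
    print(max_fine_eur("prohibited_or_data_governance", 100_000_000))
```

Under this reading, the percentage sets the ceiling for large companies, while the fixed amount sets the ceiling for smaller ones.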

AI regulation efforts accelerate

The EC’s AI Act is the first law on AI to be proposed by a major regulator. The legislation has already started making waves internationally. In September 2021, Brazil’s Chamber of Deputies, the lower house of Congress, approved the AI Bill, a legal framework for AI.

Should it be signed into law, the bill will establish principles, duties and guidelines for developing and applying AI in the country. The bill will also harmonize with other important Brazilian legislation, such as the Brazilian Data Protection Law and the Brazilian Consumer Protection Code.

In June 2022, the Canadian government introduced Bill C-27, a continuation of the government’s initiative to reform federal private sector privacy law. One of the key new elements in Bill C-27 is the proposed AI and Data Act (AIDA), which aims to regulate the development and encourage the responsible use of AI in the country.

Meanwhile, China has moved significantly faster. In March 2022, a new regulation governing the way online recommendations are generated through algorithms went into effect. The policy requires companies to inform users if an algorithm is being used to display certain information to them. Consumers must also be able to opt out of being targeted.

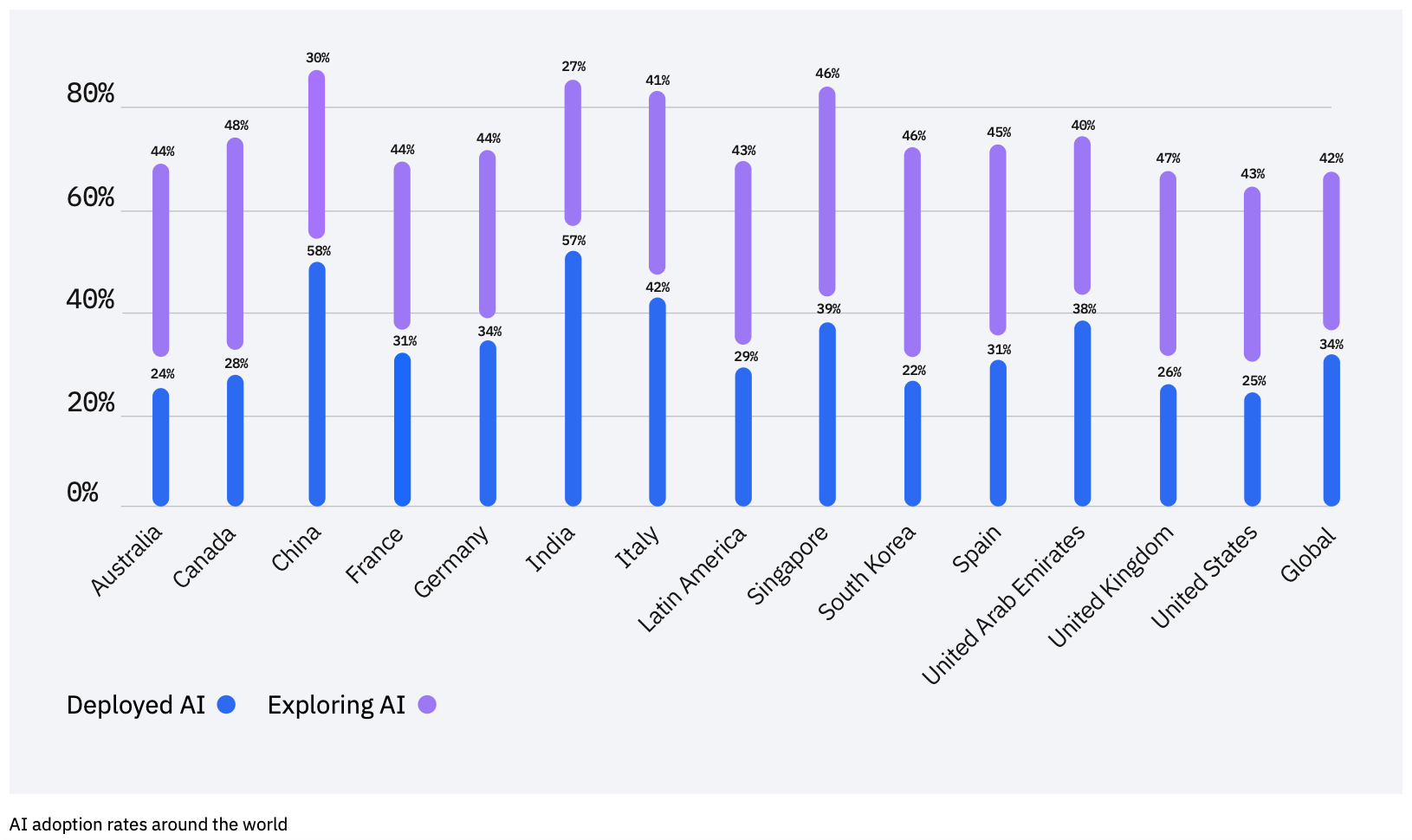

Global adoption of AI has increased considerably in recent years. A 2022 study conducted by Morning Consult on behalf of IBM found that 35% of companies are now using AI, a four-point increase from the year before. A further 42% of companies said they are exploring AI.

Chinese and Indian companies were found to be the biggest adopters of AI, with nearly 60% of IT professionals in those countries saying their organization already actively uses AI, compared with lagging markets like South Korea (22%), Australia (24%), the US (25%), and the UK (26%).

AI adoption rates around the world, Source: IBM Global AI Adoption Index 2022

Featured image credit: Edited from Freepik and Unsplash